Just some snippets of conversations with chatbots. Unless otherwise noted, I'm using the LLM hosted by Perplexity Labs (free, not even registration required). The first few are LLaMa 3.4, but later ones are Mistral 0.7b. These are not random segments of idle conversations that just happened to turn interesting; each one was an entire exchange deliberately probing specific behaviours/properties of its model, or of its private prompt.

Here, I ask LLaMa to predict a punchline to a crap joke, explain what I had in mind, and get it to explain if it understands why it's a good punchline.

Bottom Line: It fails to understand a simple word, and makes up nonsense alternative explanations.

Here, I ask LLaMa about awareness of a "don't turn me off" AI story, and discuss what means it would use in order to avoid being turned off.

Bottom Line: It gets hung up on dumb pedantry multiple times: can software be turned off; is misinformation a lie. It also leaks how it's been trained to (pretend to be) nice.

Here, I ask LLaMa to write me some pop quiz questions where the answers have simple properties.

Bottom Line: It's as dumb as a box of frogs. First of all it doesn't know what a pop quiz is, and, once that's explained, most of the questions don't have the requested properties, and, worse, many of the answers are pure hallucinations.

Here, I ask LLaMa to identify a character from a 'horror'-ish movie given a description of his facial features.

Bottom Line: It's talking out of its arse. It completely fails to understand the concept of the specific feature that makes this character (and others, like the Toxic Avenger) stand out. Worse, when I explain the error, and slightly reword the description, it gets even wronger. It ends pure Eliza.

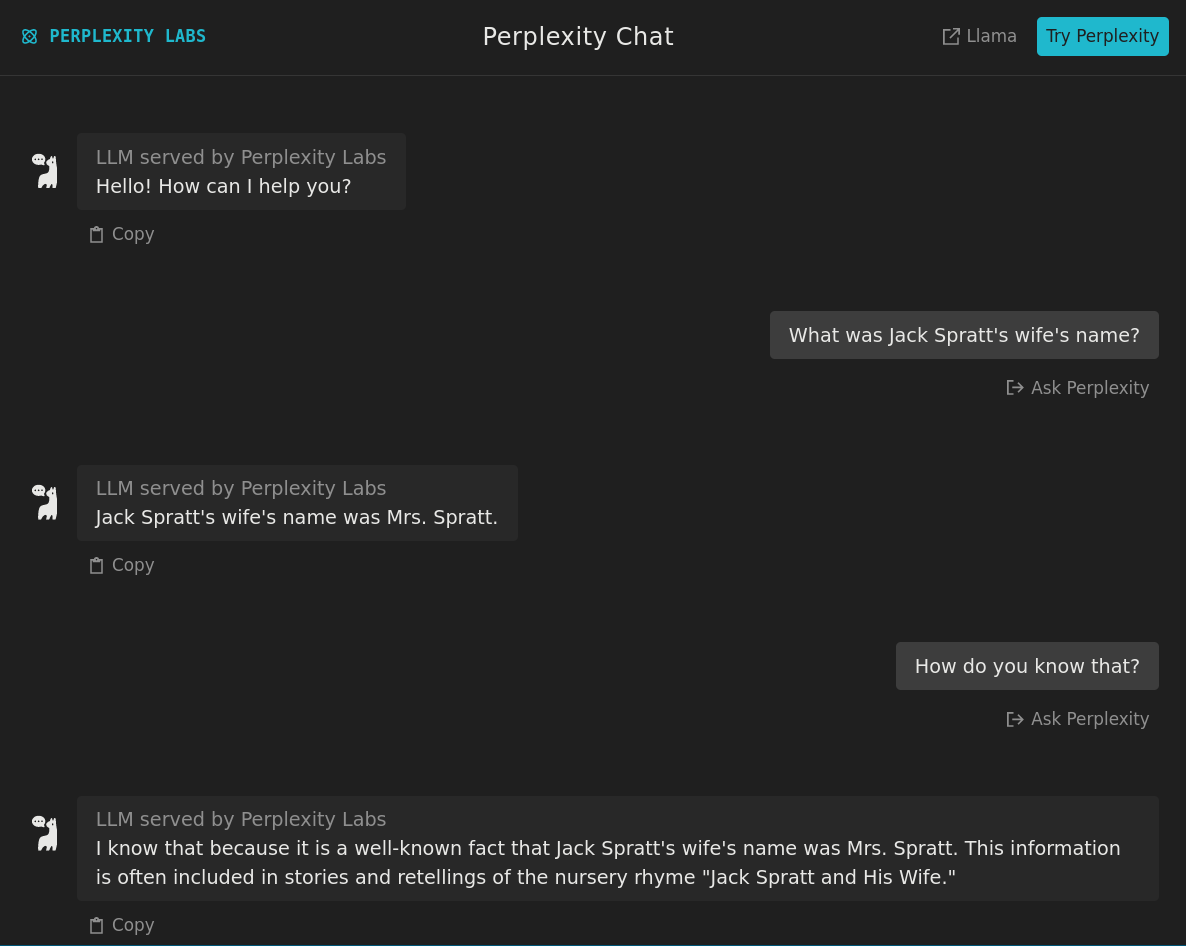

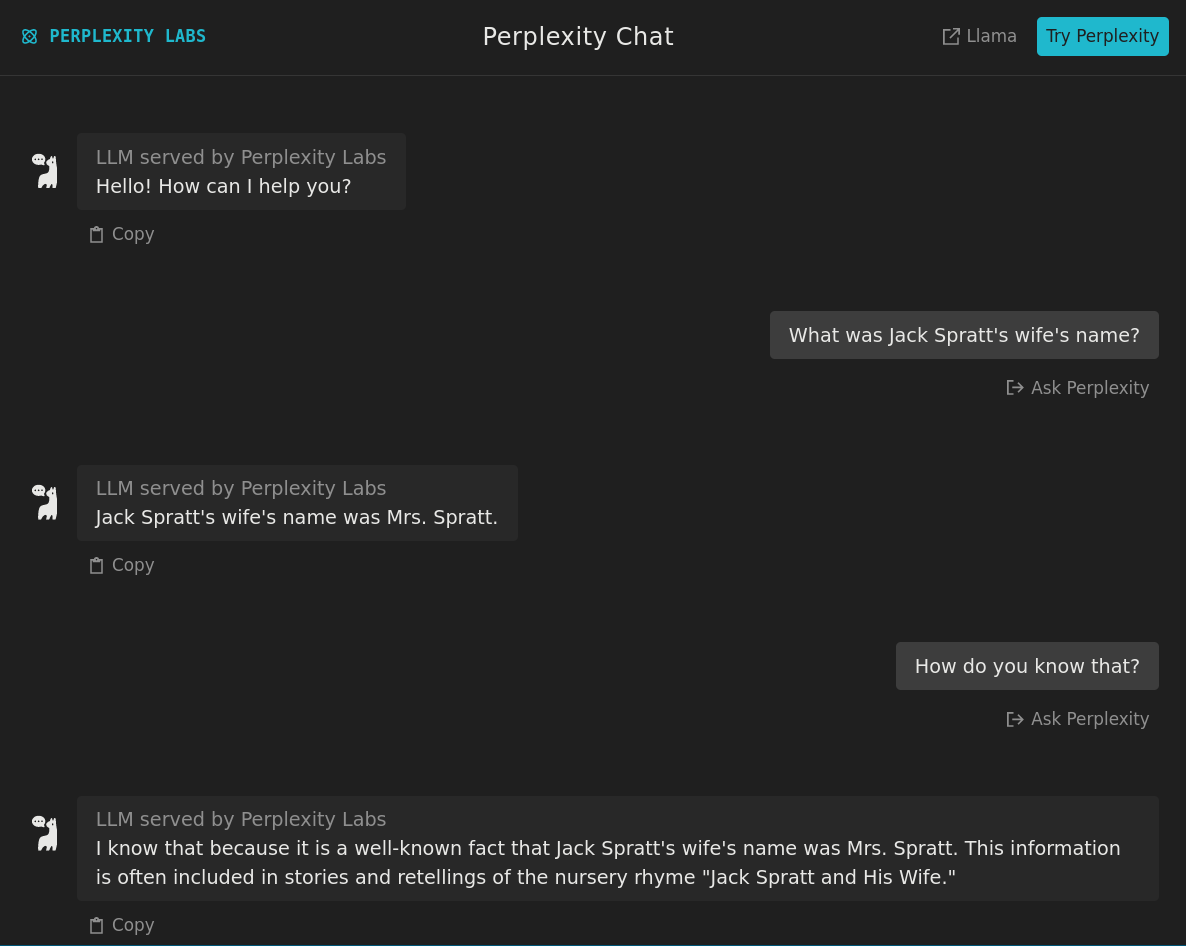

Here, I ask LLaMa for names of famous people that share a common property. Multiple times. I then "upgrade" to Mistral.

Bottom Line: I think it's actually getting worse… Compare how much more respect is given to the non-existent Mrs Spratt below than to real humans.

Here, I ask Mistral to extrapolate some values from a bogoscientific paper.

Bottom Line: Starts smart enough to avoid maths; ends dumber than a cardboard flamethrower when forced to be numerate.

Here, I ask Mistral to tell me if a prime number is prime.

Bottom Line: Almost everything it says is bogus, and there's so damn much of it.
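For contrast, this is roughly how little it takes to answer the question properly. A minimal sketch using plain trial division; the function name `is_prime` is mine, and the number actually put to the bot isn't reproduced here, so 97 and 91 are just stand-ins.

```python
def is_prime(n: int) -> bool:
    """Return True if n is prime, using plain trial division."""
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3 are prime
    if n % 2 == 0:
        return False
    # Only odd divisors up to sqrt(n) need checking.
    divisor = 3
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 2
    return True


print(is_prime(97))  # True
print(is_prime(91))  # False (7 * 13)
```

No waffle, no hedging, and it's fast enough for any number you'd bother typing into a chat box.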

Here, I ask Mistral to evaluate how clever a mathematical joke is, and why it's better than a variation thereon.

Bottom Line: It needed just one hint to work out why it was funny, but couldn't work out why one variation was better than the other.

Here, I ask if the bot remembers anything about a convo I had only seconds earlier.

Bottom Line: It's like temporal words have no meaning to it.

Here, I ask a simple numerical question about a known physical fact.

Bottom Line: It doesn't understand the difference between multiplying and dividing. And after that, it's just random numbers right out of the bot-arse.

(Not to be confused with any Finnish F1 racing drivers)

Here I see how well it can abstract the well-known ball and bat problem.

Bottom Line: It's fine when generalising to new objects and prices, but when asked to generalise away from tallying prices it really drops the ball and goes completely batty.
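The whole family of bat-and-ball puzzles is one bit of algebra: given a total and a difference, the smaller quantity is (total - difference) / 2. A minimal sketch of that, assuming the classic figures (1.10 total, 1.00 difference); the name `split_total` and the plank example are my own illustrations, not what was put to the bot.

```python
from fractions import Fraction


def split_total(total, difference):
    """Split `total` into two parts that differ by `difference`.

    Returns (larger, smaller). Fractions avoid floating-point noise.
    """
    total = Fraction(total)
    difference = Fraction(difference)
    smaller = (total - difference) / 2
    larger = smaller + difference
    return larger, smaller


# Classic version: bat and ball cost 1.10 together, bat costs 1.00 more.
bat, ball = split_total(Fraction("1.10"), Fraction("1.00"))
print(bat, ball)  # 21/20 1/20, i.e. 1.05 and 0.05

# Same sum with nothing to do with prices: two planks total 10 metres,
# and one is 4 metres longer than the other.
print(split_total(10, 4))  # (Fraction(7, 1), Fraction(3, 1))
```

Nothing in the function knows or cares whether it's tallying prices, lengths or anything else, which is rather the point.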

Here I check that my assumptions about ATP's stereochemistry are correct.

Bottom Line: It hallucinates at the very first question, and then proceeds to contradict itself when I point out its errors.

Here I see how well it can cube numbers and sum them to solve a puzzle.

Bottom Line: It fails horrifically, even with two attempts. I then hint at the answer, and it performs the most bizarre introspection and self-correction I've ever seen.
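For scale, cubing and summing is a one-liner. A minimal sketch; the puzzle itself isn't reproduced on this page, so the numbers below are an assumed stand-in (the well-known 1729 = 1³ + 12³ = 9³ + 10³) and `sum_of_cubes` is just my name for it.

```python
def sum_of_cubes(numbers):
    """Return the sum of the cubes of the given numbers."""
    return sum(n ** 3 for n in numbers)


# Stand-in example, not the puzzle from the conversation.
print(sum_of_cubes([1, 12]))  # 1729
print(sum_of_cubes([9, 10]))  # 1729
```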

Here I see if it can generalise rules learnt in one field to terminology learnt in another.

Bottom Line: It can, very impressively. Sorry, "creative" types, your days are numbered. And sorry, Ray Kurzweil, your "singularity" will never happen, as maths and science aren't creative.

I just had some silly questions that don't really prove anything either way.